Mahmud Hams / Getty

Israel’s military has reportedly deployed artificial intelligence to help it pick targets for air strikes in Gaza.

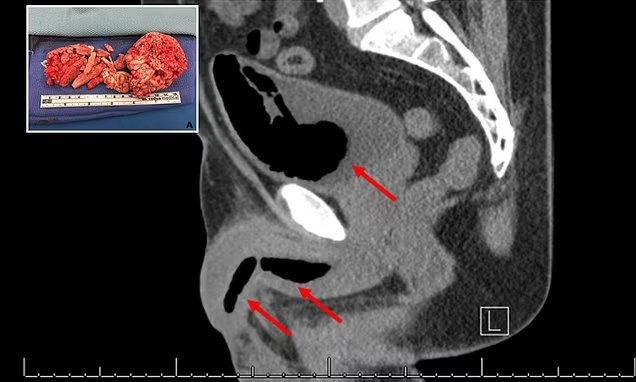

There are reasons to worry. The biggest problem with military AI, experts told The Daily Beast, is that it is created and trained by human beings, and human beings make mistakes. An AI may simply repeat those mistakes, only faster and on a greater scale.

So if a human specialist, scouring drone or satellite imagery for evidence of a military target in a city teeming with civilians, can screw up and pick the wrong building as the target for an incoming air raid, a software algorithm can make the same error, over and over.

Source: www.thedailybeast.com
